Multi-agent based software development

Researcher and developer

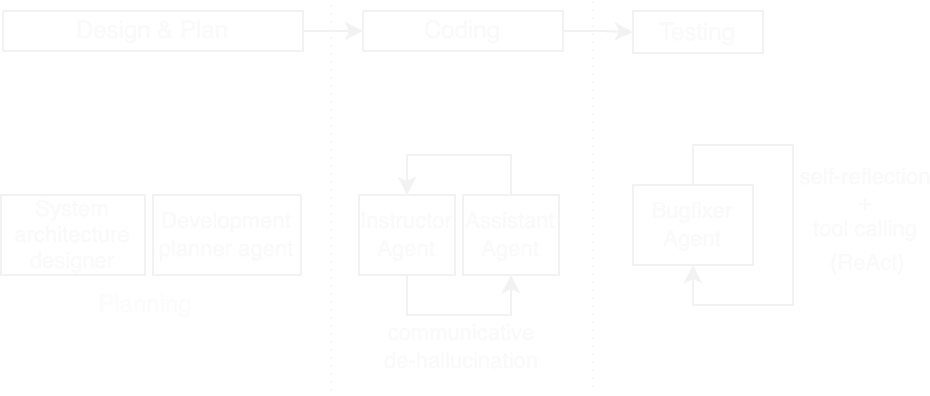

Software development requires cooperation among people with diverse skills and typically includes design, coding, and testing stages. Earlier deep learning techniques often required custom designs and handled the non-deterministic nature of software development poorly. LLM-powered multi-agent systems instead let specialized agents communicate through multi-turn dialogues.

In this project, I develop a multi-agent system for software development using large language models (LLMs). The system is composed of three subsystems:

-

System designer and planner: responsible for dividing the development process into smaller subtasks or work-packages. Uses chain-of-thought and few-shot learning.

-

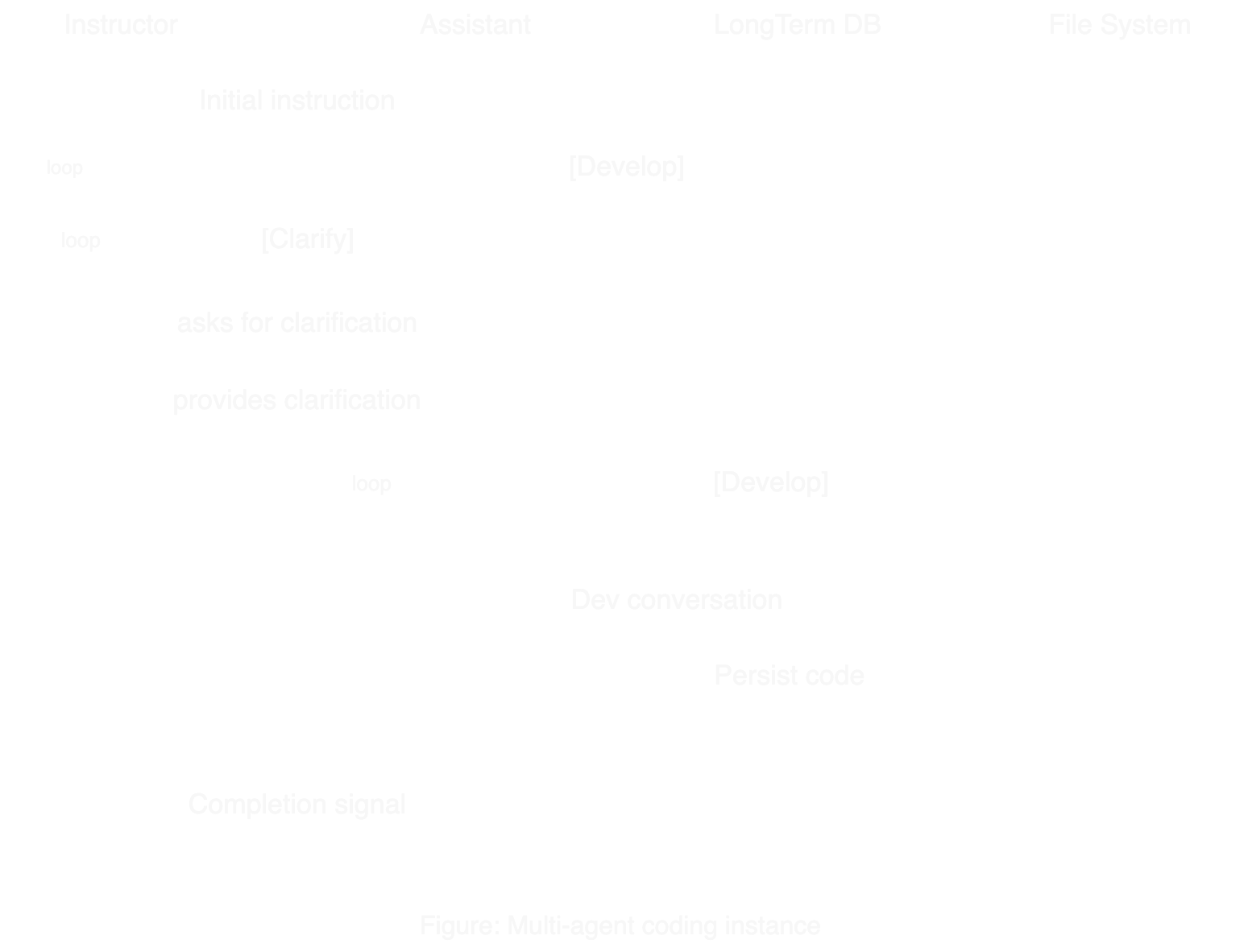

Coding system: The coding system is composed of an instructor and an assistant agent that communicate through multi-turn dialogues. Uses communicative dehallucination and repeated-instruction mechanism Communicative dehallucination mechanism which consists on two agents, one instructor and one assistant in which the coding assistant asks for clarifications before giving a final response.

-

Bugfixer system: The bugfixer system is an agent that combines Reflection, ReAct pattern, Chain-of-thought and Few-shot learning to fix bugs. Function calling allow for interaction with docker for running complete microservices stacks, reading, writing files and providing context.
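The instructor/assistant exchange with communicative dehallucination can be sketched as a bounded loop in which the assistant either asks a question or commits to an answer. This is a minimal sketch under stated assumptions: the `Agent` callable type, the `CLARIFY:` prefix convention, the clarification budget, and the way the task is re-stated after each answer (a simple take on repeated instruction) are all hypothetical, and the toy agents stand in for real LLM calls.

```python
from typing import Callable, List, Tuple

# Hypothetical agent interface: takes the conversation so far, returns a reply.
Agent = Callable[[List[Tuple[str, str]]], str]

# Assumed convention: the assistant prefixes clarification requests with this tag.
CLARIFY_PREFIX = "CLARIFY:"


def dehallucinated_exchange(
    task: str, instructor: Agent, assistant: Agent, max_clarifications: int = 3
) -> str:
    """One instructor/assistant exchange in which the assistant may ask
    clarifying questions before committing to a final answer."""
    history: List[Tuple[str, str]] = [("instructor", task)]
    for _ in range(max_clarifications):
        reply = assistant(history)
        history.append(("assistant", reply))
        if not reply.startswith(CLARIFY_PREFIX):
            return reply  # a final response, not a question
        answer = instructor(history)
        # Re-state the task with every answer (assumed form of repeated instruction).
        history.append(("instructor", f"{answer}\nTask reminder: {task}"))
    # Clarification budget used up: ask for a final answer.
    history.append(("instructor", f"Give your final answer now. Task: {task}"))
    return assistant(history)


# Toy agents standing in for LLM calls.
def toy_assistant(history: List[Tuple[str, str]]) -> str:
    if len(history) == 1:
        return "CLARIFY: which language?"
    language = history[-1][1].split("\n")[0]
    return f"def add(a, b): return a + b  # {language}"


def toy_instructor(history: List[Tuple[str, str]]) -> str:
    return "Python"


result = dehallucinated_exchange("Write an add function", toy_instructor, toy_assistant)
print(result)  # def add(a, b): return a + b  # Python
```

Capping the number of clarification rounds keeps the dialogue from looping indefinitely when the assistant keeps asking questions.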

Because LLM answers are stochastic, an auxiliary agent called the information extractor is proposed. It is a multi-step ReAct agent that extracts specific pieces of code and/or text more precisely.
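The observe/check/act cycle behind the information extractor can be illustrated with a deterministic simplification that swaps the ReAct agent's reasoning for a fixed policy: find fenced blocks, keep those that parse, and otherwise ask for a reformatted reply. The function name, the use of `ast.parse` as the validity check, and the `reask` callback are illustrative assumptions, not the system's actual tools.

```python
import ast
import re
from typing import Callable, List, Optional

FENCE = "`" * 3  # built indirectly so this snippet can itself sit inside a Markdown fence
# A fenced block with an optional language tag.
FENCE_RE = re.compile(FENCE + r"[\w+-]*\n(.*?)" + FENCE, re.DOTALL)


def extract_python_code(
    text: str, reask: Optional[Callable[[str], str]] = None, max_steps: int = 3
) -> List[str]:
    """Extract syntactically valid Python snippets from a free-form LLM reply.
    `reask` is a hypothetical callback that asks an LLM to reformat its reply."""
    for _ in range(max_steps):
        # Observe: collect every fenced block in the current text.
        candidates = FENCE_RE.findall(text)
        # Check: keep only snippets that parse as Python.
        valid = []
        for snippet in candidates:
            try:
                ast.parse(snippet)
            except SyntaxError:
                continue
            valid.append(snippet.strip())
        if valid:
            return valid
        # Act: nothing usable was found, so request a reformatted reply and retry.
        if reask is None:
            break
        text = reask(text)
    return []


reply = "Sure! " + FENCE + "python\ndef f(:\n" + FENCE + " and " + FENCE + "python\nx = 1\n" + FENCE
print(extract_python_code(reply))  # ['x = 1']
```

The broken first block is discarded and only the snippet that parses survives, which is the kind of filtering that makes downstream stages less sensitive to noisy LLM output.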

All agents have access to a long-term database for inter-stage communication and use short-term memory for conversation history.
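The two memory tiers can be sketched as a shared key-value table plus a bounded per-agent history. SQLite, the `artifacts` table layout, the class names, and the turn limit are assumptions for illustration; the source only specifies a long-term database and short-term conversation memory.

```python
import sqlite3
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple


class LongTermStore:
    """Shared store for artifacts passed between stages (e.g. planner -> coder).
    SQLite is an assumed backend; any database would fit the description."""

    def __init__(self, path: str = ":memory:") -> None:
        # check_same_thread=False lets agent threads share one connection.
        self.conn = sqlite3.connect(path, check_same_thread=False)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS artifacts "
            "(stage TEXT, key TEXT, value TEXT, PRIMARY KEY (stage, key))"
        )

    def put(self, stage: str, key: str, value: str) -> None:
        with self.conn:  # commits the transaction on success
            self.conn.execute(
                "INSERT OR REPLACE INTO artifacts (stage, key, value) VALUES (?, ?, ?)",
                (stage, key, value),
            )

    def get(self, stage: str, key: str) -> Optional[str]:
        row = self.conn.execute(
            "SELECT value FROM artifacts WHERE stage = ? AND key = ?", (stage, key)
        ).fetchone()
        return row[0] if row else None


class ShortTermMemory:
    """Bounded conversation history: the oldest turns drop out first."""

    def __init__(self, max_turns: int = 20) -> None:
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, role: str, content: str) -> None:
        self.turns.append((role, content))

    def as_messages(self) -> List[Dict[str, str]]:
        return [{"role": r, "content": c} for r, c in self.turns]


store = LongTermStore()
store.put("planning", "work_packages", '["auth-service", "api-gateway"]')
memory = ShortTermMemory(max_turns=2)
for i in range(3):
    memory.add("user", f"turn {i}")
print(store.get("planning", "work_packages"))
print(memory.as_messages())  # only turns 1 and 2 remain
```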

The system is implemented with multi-threading and uses the publish-subscribe pattern for inter-agent communication.
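A minimal in-process version of the threaded publish-subscribe setup looks like the following. The `Broker` class, the topic names `plan.ready` and `code.ready`, the `coder_agent` function, and the `None` shutdown sentinel are hypothetical; a production system might use an external message broker instead.

```python
import queue
import threading
from collections import defaultdict
from typing import DefaultDict, List


class Broker:
    """Minimal publish-subscribe broker: each subscriber gets its own queue."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[queue.Queue]] = defaultdict(list)
        self._lock = threading.Lock()

    def subscribe(self, topic: str) -> queue.Queue:
        q: queue.Queue = queue.Queue()
        with self._lock:
            self._subs[topic].append(q)
        return q

    def publish(self, topic: str, message: object) -> None:
        with self._lock:
            subscribers = list(self._subs[topic])
        for q in subscribers:
            q.put(message)  # queue.Queue is thread-safe


def coder_agent(broker: Broker, inbox: queue.Queue) -> None:
    """Hypothetical agent thread: consumes work packages, publishes results."""
    while True:
        package = inbox.get()
        if package is None:  # sentinel: shut down
            break
        broker.publish("code.ready", f"code for {package}")


broker = Broker()
plans = broker.subscribe("plan.ready")
results = broker.subscribe("code.ready")
worker = threading.Thread(target=coder_agent, args=(broker, plans))
worker.start()
for pkg in ["auth-service", "api-gateway"]:
    broker.publish("plan.ready", pkg)
broker.publish("plan.ready", None)
worker.join()
received = [results.get_nowait() for _ in range(results.qsize())]
print(received)  # ['code for auth-service', 'code for api-gateway']
```

Giving each subscriber its own queue decouples producers from consumers, so a planner can publish work packages without knowing how many coding agents are listening.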

The system uses metrics such as executability, consistency, completeness, and quality to evaluate the generated software code.

Evaluating the generated software is challenging: typical function-oriented metrics such as pass@k do not comprehensively evaluate entire software systems. I proposed three metrics, plus a combined quality score:

- Executability: evaluates how well the software operates correctly within a compilation environment.

- Consistency: evaluates how well the generated code matches the original requirement, using similarity between embeddings.

- Completeness: quantifies the percentage of the software without placeholder code.

- Quality: a comprehensive metric that combines the three factors above into a measure of overall quality.
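The four metrics can be sketched as simple functions over a dictionary of generated files. Several pieces are assumptions: Python's built-in `compile()` stands in for a real compilation environment, the placeholder markers in `PLACEHOLDER_RE` are illustrative, the embedding vectors are supplied directly (no embedding model is shown), and quality is taken as the product of the three metrics because the source does not state how they are combined.

```python
import math
import re
from typing import Dict, Sequence

# Assumed placeholder markers; the real system may use a different list.
PLACEHOLDER_RE = re.compile(r"\bTODO\b|\bFIXME\b|NotImplementedError|^\s*pass\s*$", re.MULTILINE)


def executability(files: Dict[str, str]) -> float:
    """Share of files that compile; compile() stands in for a real build."""
    ok = 0
    for name, source in files.items():
        try:
            compile(source, name, "exec")
            ok += 1
        except SyntaxError:
            pass
    return ok / len(files) if files else 0.0


def completeness(files: Dict[str, str]) -> float:
    """Share of files containing no placeholder markers."""
    clean = sum(1 for src in files.values() if not PLACEHOLDER_RE.search(src))
    return clean / len(files) if files else 0.0


def consistency(req_emb: Sequence[float], code_emb: Sequence[float]) -> float:
    """Cosine similarity between requirement and code embeddings."""
    dot = sum(a * b for a, b in zip(req_emb, code_emb))
    norm = math.sqrt(sum(a * a for a in req_emb)) * math.sqrt(sum(b * b for b in code_emb))
    return dot / norm if norm else 0.0


def quality(exe: float, cons: float, comp: float) -> float:
    """Assumed combination: the product of the three metrics."""
    return exe * cons * comp


files = {
    "app.py": "def add(a, b):\n    return a + b\n",
    "db.py": "def connect():\n    pass\n",
}
exe = executability(files)  # both files compile
comp = completeness(files)  # db.py is only a stub ("pass")
cons = consistency([3.0, 4.0], [4.0, 3.0])
print(exe, comp, cons, quality(exe, cons, comp))
```

A multiplicative combination means any single metric near zero drags overall quality down, which matches the intuition that code which does not compile or is mostly placeholders is of low quality regardless of the other scores.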

Multi-agent coding submodule

This applies to one work package or subpackage at a time. Multiple instances are spawned as required.
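Spawning one coding instance per work package maps naturally onto a thread pool. In this sketch, `run_coding_session` is a hypothetical placeholder for a full instructor/assistant session, and the worker count is an arbitrary assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def run_coding_session(package: str) -> str:
    """Hypothetical placeholder for one instructor/assistant session on a work package."""
    return f"# code for {package}"


def code_all_packages(packages: List[str], max_workers: int = 4) -> Dict[str, str]:
    # One independent coding session per work package, run concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(run_coding_session, packages)  # preserves input order
        return dict(zip(packages, results))


print(code_all_packages(["auth-service", "api-gateway"]))
# {'auth-service': '# code for auth-service', 'api-gateway': '# code for api-gateway'}
```

Threads suit this workload because each session spends most of its time waiting on LLM API responses rather than computing.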

Bugfixer submodule
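The ReAct loop with function calling described for the bugfixer can be sketched as follows: at each step the model returns a thought plus either a tool call or a final answer, and tool results are fed back as observations. The tool set (`read_file`, `write_file`, `run_python`), the step format, and the scripted model are assumptions; the Docker tooling and the Reflection step mentioned in the source are not shown.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

Tool = Callable[..., str]


def make_tools(workdir: Path) -> Dict[str, Tool]:
    """Assumed tool set exposed via function calling. A real setup would add
    a tool wrapping Docker to run the full microservice stack."""

    def read_file(path: str) -> str:
        return (workdir / path).read_text()

    def write_file(path: str, content: str) -> str:
        (workdir / path).write_text(content)
        return f"wrote {path}"

    def run_python(path: str) -> str:
        proc = subprocess.run(
            [sys.executable, str(workdir / path)], capture_output=True, text=True, timeout=30
        )
        return f"exit={proc.returncode}\n{proc.stdout}{proc.stderr}"

    return {"read_file": read_file, "write_file": write_file, "run_python": run_python}


def react_bugfix(
    model: Callable[[List[dict]], dict], tools: Dict[str, Tool], task: str, max_steps: int = 8
) -> str:
    """ReAct loop: the model emits a thought plus a tool call or a final answer."""
    transcript: List[dict] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append({"role": "assistant", "content": json.dumps(step)})
        if "final" in step:
            return step["final"]
        observation = tools[step["action"]](**step["args"])
        transcript.append({"role": "tool", "content": observation})
    return "gave up"


with tempfile.TemporaryDirectory() as d:
    wd = Path(d)
    (wd / "main.py").write_text("print(1 / 0)\n")
    # A scripted stand-in for the LLM, replaying a plausible fix sequence.
    script = iter([
        {"thought": "Run it first", "action": "run_python", "args": {"path": "main.py"}},
        {"thought": "Division by zero; fix the divisor", "action": "write_file",
         "args": {"path": "main.py", "content": "print(1 / 1)\n"}},
        {"thought": "Re-run to confirm", "action": "run_python", "args": {"path": "main.py"}},
        {"thought": "Output looks right", "final": "fixed ZeroDivisionError in main.py"},
    ])
    result = react_bugfix(lambda t: next(script), make_tools(wd), "Fix main.py")
print(result)
```

Bounding the loop with `max_steps` prevents a confused model from calling tools forever.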

Planning submodule

Makes use of three subsystems:

- System designer: single-round configuration.

- TechPlanner: single-round configuration.

- Information Extractor: multi-round configuration, with memory.

Techniques used:

- Chain-of-thought.

- Few-shot prompting.
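A single-round planner prompt combining both techniques might be assembled like this: worked examples supply the few-shot signal, and each example includes its reasoning so the model imitates a chain of thought before listing work packages. The example content, the system prompt wording, the JSON output format, and the function names are all hypothetical; the project's actual prompts are not given.

```python
import json
from typing import Dict, List

# Hypothetical few-shot example; the real prompts are not given in the source.
FEW_SHOT = [
    {
        "requirement": "A to-do list web app with user accounts.",
        "reasoning": "Accounts need authentication; to-dos need CRUD storage; users need a UI.",
        "work_packages": ["auth-service", "todo-service", "web-frontend"],
    },
]

SYSTEM = (
    "You are a system designer. Split the requirement into work packages. "
    "Think step by step, then output JSON with keys 'reasoning' and 'work_packages'."
)


def build_planner_messages(requirement: str) -> List[Dict[str, str]]:
    """Single-round prompt: instructions, worked examples (few-shot) that show
    their reasoning (chain-of-thought), then the new requirement."""
    messages = [{"role": "system", "content": SYSTEM}]
    for ex in FEW_SHOT:
        messages.append({"role": "user", "content": ex["requirement"]})
        answer = {"reasoning": ex["reasoning"], "work_packages": ex["work_packages"]}
        messages.append({"role": "assistant", "content": json.dumps(answer)})
    messages.append({"role": "user", "content": requirement})
    return messages


def parse_plan(reply: str) -> List[str]:
    """Parse the model's JSON reply into a list of work-package names."""
    return list(json.loads(reply)["work_packages"])


msgs = build_planner_messages("An online shop with payments.")
print(len(msgs))  # 4: system, one example pair, the new requirement
print(parse_plan('{"reasoning": "Shops need a catalog and payments.", "work_packages": ["catalog", "payments"]}'))
```

Requesting JSON output keeps the reasoning visible while giving downstream agents a machine-readable list of work packages.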

Conclusions

- Multi-agent techniques and advanced prompting are key to solving complex problems with LLMs.

- Information extractors, communicative dehallucination, and the repeated-instruction mechanism greatly help make LLM workflows more deterministic.

- More research is required to define useful metrics for generated code.